AI powers up a revolution in risk mitigation and management

If Costco issues an urgent product recall, AI can help the retailer call nearly four million customers every hour. It’s a demonstration of the power AI now brings to help businesses manage, more effectively and efficiently, what was previously a time-consuming and labour-intensive job. Nor is AI’s role limited to handling an incident once it happens. “It’s often human error which is the clear and frequent cause of risk in product recall and general liability lines. By turning to AI to handle many of the routine tasks that are currently carried out by people, AI can significantly reduce the chances of an incident ever happening,” says Amie Townsend, Product Recall Underwriter at Hiscox London Market.

While AI can offer a range of new opportunities to manage risk, businesses and the insurance industry should also be aware of the threats that AI can pose. “Outsourcing a business’s safety approach to AI, for example, risks undermining the risk management culture of a business and even losing some of the human skill-sets necessary to identify issues that AI may not pick up, and may even compound,” says Lara Frankovic, General Liability Line Underwriter at Hiscox London Market. “The challenge for businesses is to balance the power and potential of AI without inadvertently creating new risk vulnerabilities, and for the insurance industry to work with its clients to help them safely explore the opportunities of technology.”

AI is everywhere

AI adoption is already widespread. Figures from the CompTIA IT Industry Outlook 2024 estimate that more than one in five businesses (22%) ‘are aggressively pursuing the integration of AI across a wide variety of technology products and business workflows’, while a further 33% ‘are engaged in limited implementation of AI’ and the remaining 45% are in the early stages of AI exploration.

In the world of product recall, AI already has a range of applications. In food safety, for example, AI is being used to gather data far more efficiently from sites such as X (formerly Twitter), Yelp and Google, monitoring them for signs of foodborne pathogens and illnesses and even for product defects reported in customer complaints, says Townsend. “AI algorithms can analyse images of food, packaging and products in real time to identify abnormalities like missing labels or torn packaging, or detect incorrect temperatures, liquid levels, or humidity. That prompt detection then enables companies to identify any potential issues before a product even makes its way out of a facility.”

And if a recall does occur, AI can again play an influential part in making the recall process as efficient as possible. “When a recall happens, AI has been invaluable in areas of crisis management, such as identifying customers quickly, creating recall alerts, enabling mass communications, using chatbots for customer queries, and even the logging of data,” says Townsend. AI can even remove the need for a physical recall altogether, for example through the roll-out of over-the-air software updates.

Complex liability

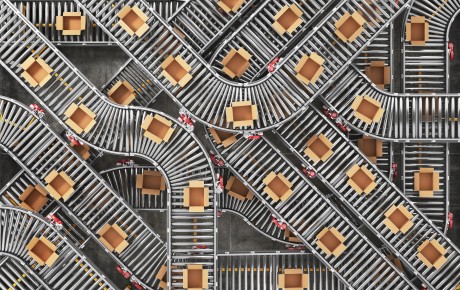

From a liability perspective, AI embedded in hardware and software can also create problems because of the central role it now plays in many environments, such as vehicles or collaborative robots (cobots) in warehouses. “AI forms the integration layer across all the other system components in a vehicle,” says Eddie Lamb, Director of Cyber Education and Advisory at Hiscox. “If the AI goes wrong, it would have wide-ranging implications not just on the individual vehicle but across a whole fleet or an entire operation. Highly autonomous systems don’t have a huge amount of human oversight and operate at great speed which means outbreaks of systemic issues from a risk point of view have the tendency to increase and could create significant liability issues.”

The insurance challenge

For insurers, identifying where that liability originates can be a challenge. “It is very difficult to determine where true liability sits when it comes to AI. Look at the supply chain in the AI arena where an AI programme itself could be a billion lines of code sourced from multiple vendors. Being able to work out where the fault itself arose in an AI programme and then who is liable for that introduces a huge amount of complexity into the claims arena,” says Lamb. “What if a cobot crushes someone in a warehouse, as was reported last year in Korea? Who is liable? The owner of the warehouse operation, the maker of the cobot, or the manufacturer of the component that failed inside the cobot? Or the maker of a sub-component?”

“AI is not regulated for its use and development and there are no globally recognised standards, which opens up a potential exposure,” says Lamb. Ultimately that uncertainty will be reflected in the underwriting process, adds Frankovic: “We will see claims coming out of AI and that will tighten the requirements around the information businesses need to provide when it comes to AI.”

Despite these challenges, the opportunities from AI are likely to outweigh the threats. “AI makes perfect sense technologically, economically, and operationally for businesses, and can be a powerful tool in helping to manage product recalls and reduce liability where human error can be a factor. The challenge is making sure that AI is adequately safeguarded against accidental and deliberate failure which comes down to having effective governance and control of the AI processes, and ensuring the presence of human touchpoints in the process itself,” says Lamb.